How to Build an AI App: A Practical Guide

Learn how to build an AI app from concept to launch. This guide covers idea validation, tech stacks, MVP development, and monetization for your AI project.


Building a successful AI app follows a clear, repeatable process. First, validate a real-world problem that users will pay to solve. Next, create a detailed plan, select the right tech stack (like Next.js and the OpenAI API), and develop a focused Minimum Viable Product (MVP). Finally, deploy the app, choose a monetization model, and iterate based on user feedback. This guide provides a step-by-step roadmap to take your concept from idea to a market-ready application.

Your Blueprint for a Successful AI App

This guide will walk you through the entire process of building an AI application, from initial concept to a launched, revenue-generating product. The journey follows a structured path designed for efficiency and market validation.

- Step 1: Idea Validation & Planning: Confirm there is a genuine market need for your app and create a detailed Product Requirements Document (PRD) to guide development.

- Step 2: Technology Selection: Choose the appropriate front-end, back-end, AI models (like GPT-4), and hosting services for your project.

- Step 3: MVP Development: Build the core, essential features of your application to create a functional first version for user testing.

- Step 4: Deployment & Launch: Make your application live and accessible to your target audience with a clear go-to-market strategy.

- Step 5: Monetization & Scaling: Implement a pricing model to generate revenue and scale your infrastructure to support a growing user base.

The Modern AI App Development Landscape

Not too long ago, building an AI app felt like a privilege reserved for machine learning PhDs or giant tech corporations. That's all changed. Thanks to accessible APIs and powerful frameworks, entrepreneurs and developers can now create sophisticated applications that solve real problems.

The world of AI app development is moving at an incredible pace, driven by explosive market growth and tools that speed everything up. This rapid expansion is happening across countless industries, creating a huge demand for AI-powered solutions.

As a result, building and launching an app has become dramatically faster and more cost-effective. The global AI market is projected to skyrocket from around $372 billion in 2025 to a staggering $2.4 trillion by 2032—that's more than a sixfold increase. At the same time, tools like GitHub Copilot and Amazon CodeWhisperer are helping developers slash routine coding time by as much as 50%. This means MVP cycles that used to take months can now be compressed into just a few weeks. For a deeper look into what's coming, you can read the full research on AI app development in 2025.

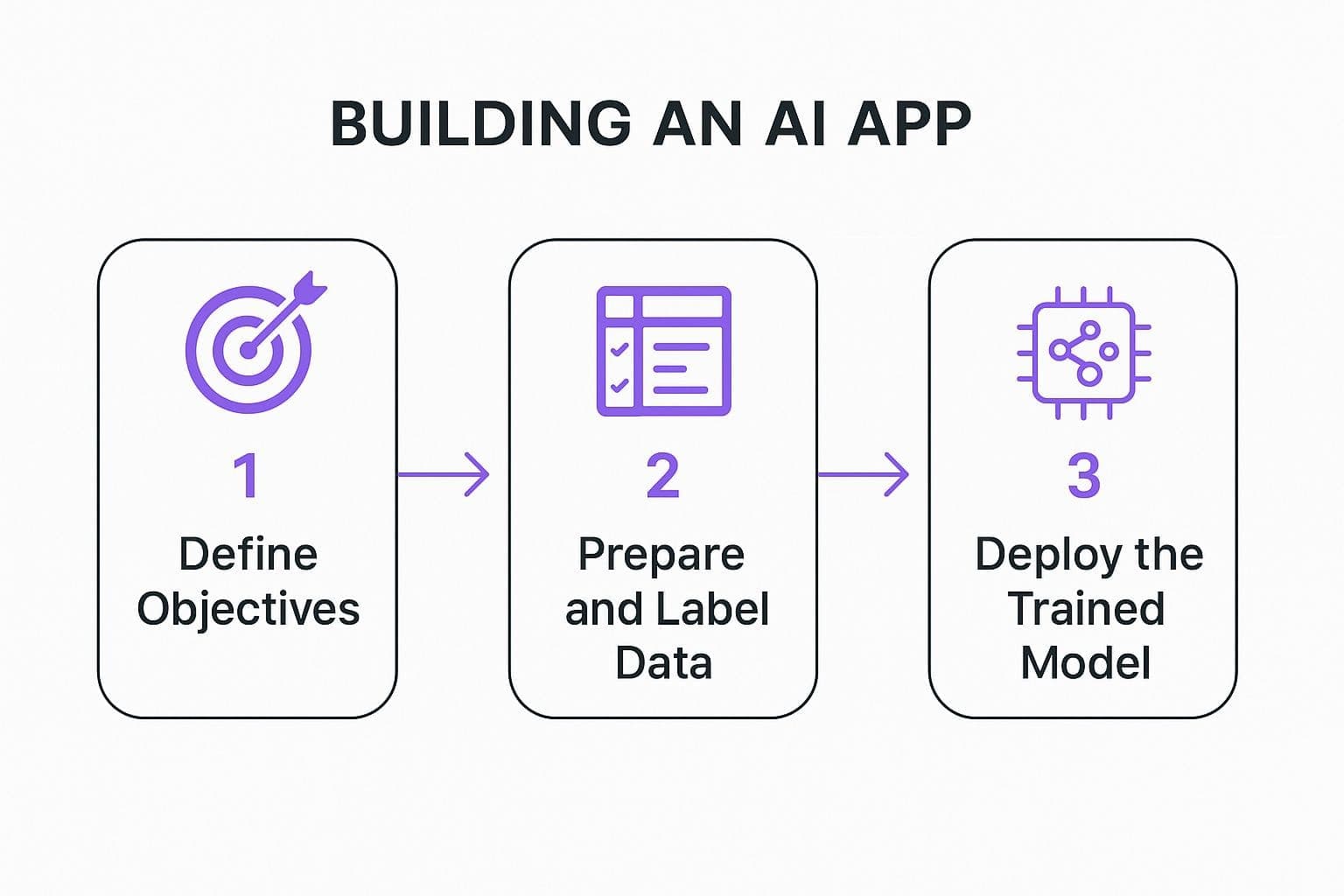

The infographic below breaks down the fundamental steps of the AI development lifecycle, from setting your goals all the way to deploying a finished model.

As you can see, a successful AI project is about much more than just code. It's a strategic process that starts with clear objectives and depends on well-prepared data to succeed.

To give you a clearer picture of the path ahead, here's a high-level look at the stages we'll be covering.

AI App Development Roadmap at a Glance

This table summarizes the core stages involved in building and launching an AI application, from the initial spark of an idea to a full-fledged market entry.

| Stage | Key Objective | Primary Outcome |
|---|---|---|
| Idea & Validation | Confirm a real market need for your solution. | A validated problem statement with evidence of user demand. |
| Product Planning | Define what to build and for whom. | A detailed Product Requirements Document (PRD). |
| Tech Stack Selection | Choose the right tools for development. | A defined stack of frameworks, APIs, and hosting solutions. |
| MVP Development | Build the core features of the application. | A functional Minimum Viable Product ready for user testing. |
| Deployment & Launch | Make the app live and accessible to users. | A deployed application with a clear go-to-market strategy. |
| Monetization | Implement a strategy to generate revenue. | A chosen pricing model and integrated payment system. |

This roadmap provides the structure you need to move from one phase to the next with confidence.

Demystifying the AI Tech Stack

Next up, we'll break down the tech stack—from the front-end frameworks your users will interact with to the core AI models like GPT-4 that power the magic. Choosing the right technologies is a huge decision that impacts everything from user experience to how easily you can scale later on.

A typical stack for a modern AI "wrapper" app usually includes a few key components:

- Front-End Framework: Tools like React or Next.js are the go-to choices for creating a snappy, interactive user interface that feels modern and fast.

- Back-End Logic: You'll need a server-side language like Node.js or Python to handle user requests, manage data, and talk to the AI model's API.

- AI Model API: This is the heart of your app. It's your connection to a Large Language Model (LLM) like OpenAI's GPT-4, Anthropic's Claude, or Google's Gemini.

- Database and Hosting: You'll need somewhere to store user data and a platform like Vercel or AWS to deploy your application so it's live for the whole world to see.


Finally, we'll get into deployment and monetization, helping you launch your app and grow it into a sustainable business. By following this blueprint, you'll be well-equipped to move from an abstract idea to a concrete, market-ready AI application.

Validating Your Idea and Crafting a Plan

So you've got a brilliant concept for an AI app. That's the easy part. The real work begins now, turning that spark of an idea into a validated, actionable plan. This is where we get brutally practical, making sure there's a real audience with a real problem that your app is uniquely positioned to solve.

The single biggest mistake I see founders make is skipping this step entirely. Enthusiasm is a powerful motivator, but it can trick you into diving straight into code. Building a product nobody actually needs is a costly and soul-crushing detour. Real momentum comes from proving your assumptions before you write a single line of code.

Finding Your Market Fit

Validation isn't about asking your friends if they like your idea—it's a methodical hunt for a genuine market need. The goal is to uncover a specific, painful problem that your target audience is already trying to solve and, crucially, is willing to pay to fix.

You have to get out of your own head and talk to potential users. I can't stress this enough. Real conversations will uncover insights you'd never find staring at a screen.

Here are a few proven ways to do it:

- Customer Interviews: Find 10-15 people who fit your target demographic and have structured conversations. The key is to focus on their current frustrations and workflows, not your solution. Ask open-ended questions like, "What's the hardest part of managing your social media content?" to get to the heart of their pain points.

- Competitor Gap Analysis: Take a hard look at the existing tools in your niche. What are users complaining about in reviews or on social media? These gaps often reveal an underserved slice of the market your AI app could nail.

- Landing Page Test: Spin up a simple one-page website that clearly explains your app's value. Run a small, targeted ad campaign to drive traffic and measure how many people sign up for a waitlist. This gives you hard data on market interest before you commit serious resources.
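To make the landing page test concrete, here is a minimal sketch of the arithmetic behind it. The visitor, signup, and ad-spend figures are made up for illustration and are not benchmarks; `landing_page_metrics` is a hypothetical helper, not part of any library.

```python
from typing import Optional, TypedDict


class LandingPageMetrics(TypedDict):
    conversion_rate: float
    cost_per_signup: Optional[float]


def landing_page_metrics(visitors: int, signups: int, ad_spend: float) -> LandingPageMetrics:
    """Summarize a waitlist test: conversion rate and cost per signup."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    conversion_rate = signups / visitors
    # Cost per signup is undefined when nobody signed up.
    cost_per_signup = ad_spend / signups if signups else None
    return {"conversion_rate": conversion_rate, "cost_per_signup": cost_per_signup}


# Hypothetical campaign: 400 visitors, 36 waitlist signups, $120 in ads.
metrics = landing_page_metrics(visitors=400, signups=36, ad_spend=120.0)
print(f"{metrics['conversion_rate']:.1%} conversion")
```

Tracking both numbers matters: a healthy conversion rate tells you the message resonates, while cost per signup tells you whether reaching those people is affordable.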

A classic pitfall is falling in love with a solution before you truly understand the problem. Effective validation forces you to obsess over the user's pain, not your product's features. This user-centric mindset is the bedrock of every great app.

For a much deeper dive into this critical first step, our ultimate guide to validating an AI app idea breaks down more strategies and frameworks.

Creating Your Product Requirements Document

Once you have solid proof that you're solving a real problem, it's time to create your blueprint: the Product Requirements Document (PRD). This isn't just bureaucratic busywork. It's a strategic document that gets everyone aligned on what you're building, why you're building it, and who you're building it for.

A well-crafted PRD is your best defense against scope creep—that sneaky tendency for a project's requirements to expand until it becomes an unmanageable beast. It ensures every feature you build ties directly back to solving the core user problem you found during validation.

Your PRD should clearly define a few key things:

- User Personas: Detailed profiles of your ideal customers. What are their goals, motivations, and pain points?

- Feature Prioritization: A ranked list of features, focused tightly on the core functionality needed for an MVP (Minimum Viable Product).

- Success Metrics: How will you know if you're winning? Define the Key Performance Indicators (KPIs) like user engagement, retention, or conversion rates that will spell success.

Think of the PRD as the single source of truth for your entire project. It's a living document that guides your dev team, keeps stakeholders in the loop, and makes sure you're always building the right thing from day one.

Choosing The Right Tech Stack For Your AI App

The tech choices you make right now will have a huge impact on your app's future. They'll dictate how fast you can build, how well your app scales, and what it costs to keep the lights on. Putting together a modern AI tech stack, especially for a GPT Wrapper app, is all about picking tools that play nicely together to deliver a smart, snappy, and reliable experience.

Think of it in layers. The front-end is everything your user sees and touches. The back-end is the engine room that handles the heavy lifting and talks to other services. And the AI model is the brain doing the actual "thinking." Getting these three layers right is the foundation of building an AI app that won't fall over the moment it gets popular.

Front-End And Back-End Foundations

For the user-facing side of things, frameworks like React and Next.js are the industry standard for a reason. They help you build dynamic, responsive interfaces that feel modern and quick—which is absolutely essential for keeping users from bouncing. Next.js is a particularly strong choice, as it offers server-side rendering and static site generation right out of the box, giving you a serious performance and SEO boost from day one.

On the back-end, your code will be managing data, handling user accounts, and making API calls to your chosen Large Language Model (LLM). Node.js is a super popular option here because it's fast and uses JavaScript, meaning your developers can use a single language for the entire stack.

Of course, Python is still a titan in the AI world, with an incredible ecosystem of data and machine learning libraries, plus web frameworks like Django and Flask for building the back-end itself.

The truth is, artificial intelligence is completely changing what users expect from an app. By 2025, we'll see AI-driven apps that adapt to user behavior on the fly, a huge leap from today's static, rule-based functions. We're already seeing this with embedded machine learning models that analyze user data to deliver better search results and personalized content.

Navigating The World Of LLMs

The heart of your AI app is the Large Language Model. This is where you'll spend a ton of time experimenting and tweaking. The decision here is a constant balancing act between raw power, cost, and how complicated it is to work with.

Picking the right model is a crucial early decision. Here's a quick rundown of the major players to help you figure out where to start.

Comparing Popular LLMs For Your AI App

| Model | Best For | Key Strengths | Considerations |
|---|---|---|---|
| OpenAI GPT-4 | Complex reasoning, high-quality text generation, and general-purpose tasks. | Widely considered the gold standard for versatility and powerful creative and logical capabilities. | Can be more expensive than other options; performance can vary between updates. |
| Anthropic Claude 3 | Analyzing long documents, enterprise applications, and tasks requiring high safety. | Massive context window (up to 200K tokens) makes it fantastic for summarizing books or codebases. | Often seen as slightly less "creative" than GPT-4 but excels at professional tasks. |
| Google Gemini | Multimodal applications that involve text, images, and video. | Natively built for multimodality, offering strong performance in understanding different types of media. | Still a newer player, so the developer ecosystem is catching up. |
| Open-Source (Llama 3, Mistral) | Cost control, customization, and running on your own infrastructure. | Offers total control over the model and can be significantly cheaper for high-volume use cases. | Requires technical expertise to host and maintain the infrastructure yourself. |

Ultimately, the best LLM is the one that fits your app's specific needs and budget. Don't be afraid to start with one and switch later if a better option comes along.

Your choice of LLM is not set in stone. A smart move is to build your app in a modular way that lets you swap out models as new, better, or cheaper ones hit the market. Pick one that gets your MVP out the door and be ready to adapt.
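One way to keep models swappable is to hide each vendor behind the same small interface. The sketch below is only an illustration of that idea: the provider names and the stub functions are hypothetical placeholders, and a real adapter would call the vendor's API where the stubs return canned text.

```python
from typing import Callable, Dict

# A "provider" is any function that maps a prompt string to a completion string.
Provider = Callable[[str], str]


def fake_openai(prompt: str) -> str:
    # Placeholder: a real adapter would call the vendor's API here.
    return f"[openai-stub] {prompt}"


def fake_claude(prompt: str) -> str:
    # Placeholder for a second vendor with the same call shape.
    return f"[claude-stub] {prompt}"


# Registry of available providers; switching models is a one-line config change.
PROVIDERS: Dict[str, Provider] = {"openai": fake_openai, "claude": fake_claude}


def generate(prompt: str, provider_name: str = "openai") -> str:
    """Route a prompt to the configured provider."""
    try:
        provider = PROVIDERS[provider_name]
    except KeyError:
        raise ValueError(f"unknown provider: {provider_name}") from None
    return provider(prompt)


print(generate("Summarize this article", provider_name="claude"))
```

Because the rest of the app only ever calls `generate`, trying a new or cheaper model means writing one adapter function and registering it.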

Integrating these models is easier than ever. OpenAI's API documentation, for example, is clean and simple to get started with, and that kind of straightforward access is what allows developers to start building an AI app incredibly fast.

Choosing Your Database And Hosting

Finally, you need a home for your data and a place to run your app. For databases, a flexible NoSQL option like MongoDB or Firebase Firestore, or a managed PostgreSQL service like Supabase, is perfect for early-stage apps. They let you adapt as your data structure evolves, so you don't get locked in too early.

When it's time to go live, platforms like Vercel and Netlify provide a fantastic developer experience. They integrate seamlessly with frameworks like Next.js and automate your deployments, saving you a ton of headaches.

For more complex projects or if you need granular control, the big cloud providers like Amazon Web Services (AWS) or Google Cloud Platform (GCP) offer all the power and scalability you could ever need.

If you need a hand making these critical decisions, be sure to check out our complete guide on tech stack recommendations for your next project.

Building Your Minimum Viable Product

With a validated idea in hand and your tech stack chosen, it's time to actually bring your vision to life. This is where the rubber meets the road and your app takes its first real steps.

But let's be clear about the mission here. We are not trying to build the perfect, feature-loaded product right out of the gate. The goal is to create a lean and focused Minimum Viable Product (MVP). Your MVP solves one core problem exceptionally well, and that's it.

This whole approach is built for speed and learning. By zeroing in on a single, crucial feature for your initial launch, you get your app into the hands of real users as fast as humanly possible. Their feedback is gold—it's the most valuable resource you have for guiding your next steps and making sure you're building something people actually need and will pay for.

Adopting an Agile Mindset

The agile development mindset is your best friend during this phase. It's all about embracing an iterative loop of building, testing, and learning in small, manageable cycles. Forget about disappearing into a cave for months to emerge with a "finished" product. Instead, you'll work in short sprints, focusing on delivering tangible value with each one.

This method keeps you tethered to reality and closely aligned with user needs. The core idea is simple: launch a functional but minimal version, see how people use it, gather insights, and then use that data to decide what to build next. It's your best defense against wasting time and money on features nobody asked for.

"We continue to believe that the best way to make an AI system safe is by iteratively and gradually releasing it into the world, giving society time to adapt and co-evolve with the technology, learning from experience, and continuing to make the technology safer."

This iterative process doesn't just validate your product; it de-risks the entire development journey, one small step at a time. To see how this works in the real world, check out our breakdown of successful Minimum Viable Product examples.

Setting Up Your Development Environment

Okay, time for the first practical step: getting your development environment configured. This is where you'll install the tools, libraries, and frameworks you picked out earlier. A pretty standard setup for a GPT Wrapper app would include:

- Code Editor: A workhorse like VS Code is essential for writing and managing your code.

- Version Control: Using Git and a GitHub repository is non-negotiable for tracking changes and collaborating.

- Framework CLI: You'll use the command-line interface for your chosen framework (like create-next-app) to get your project structure up and running in minutes.

- API Keys: Keep your LLM API keys safe and sound. Store them securely using environment variables so they never get exposed in your code.
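As a minimal sketch of the environment-variable approach, the snippet below reads a key at startup and fails loudly if it is missing. The variable name `LLM_API_KEY` is an assumption for illustration; use whatever name your provider or hosting platform expects.

```python
import os


def load_api_key(var_name: str = "LLM_API_KEY") -> str:
    """Read the API key from the environment and fail loudly if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before starting the app"
        )
    return key
```

Failing at startup is a deliberate choice here: a missing key surfaces immediately instead of as a confusing error on a user's first request.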

Once your environment is ready, you can start wiring up the front-end to your back-end logic. This is the foundation for your app's core functionality.

Making Your First API Call

This is it—the magic moment. Making that first successful API call to your Large Language Model is when your app starts to feel truly intelligent. Your back-end server is responsible for taking a request from your front-end, packaging it with your API key, and shooting it off to the model's endpoint.

The process usually breaks down like this:

- User Input: The front-end captures what the user wants (e.g., text from a form).

- Server Request: The front-end sends that data over to your back-end server.

- API Call: Your server builds a formal request to the LLM API, including the user's input and any custom prompts you've engineered.

- Response Handling: The server gets the AI-generated response back, cleans it up if needed, and sends it back to the front-end to be shown to the user.
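The steps above can be sketched on the server side roughly as follows, using only Python's standard library. This assumes OpenAI's Chat Completions endpoint and response shape as publicly documented; the model name and system prompt are placeholders, and a production app would add error handling and retries.

```python
import json
import urllib.request

OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(user_input: str, api_key: str,
                       model: str = "gpt-4") -> urllib.request.Request:
    """Package user input, a system prompt, and the API key into an HTTP request."""
    payload = {
        "model": model,  # placeholder; use whichever model your account offers
        "messages": [
            {"role": "system", "content": "You are a concise assistant."},
            {"role": "user", "content": user_input},
        ],
    }
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the generated text out of the response and trim whitespace."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"].strip()


def ask_model(user_input: str, api_key: str) -> str:
    """Send the request and return the cleaned-up reply (performs a network call)."""
    request = build_chat_request(user_input, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_reply(response.read())
```

Splitting request building and response parsing from the network call keeps both halves easy to unit test without spending API credits.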

The Art of Prompt Engineering

Getting consistent, high-quality responses from an AI isn't just luck; it's a skill called prompt engineering. It involves carefully crafting the instructions you give the model to guide its output toward what you actually want. A vague prompt will get you generic, unpredictable results. A specific, well-structured prompt gets you what you need far more consistently.

This is so much more than just asking a question. It's about providing context, setting constraints, and even giving examples.

For instance, don't just ask an AI to "write a social media post." That's a recipe for bland content. A much better prompt would be: "Write a 280-character Twitter post for a B2B SaaS company announcing a new feature called 'Analytics Dashboard'. Use a professional but engaging tone and include the hashtag #SaaS."

This level of detail is what separates a gimmick from a reliable AI app. The quality of your prompts has a direct impact on the user experience and the real-world value your product delivers.
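In an app, prompts like the one above are usually assembled from structured fields rather than typed by hand. Here is a small sketch of that idea; the function name and its parameters are hypothetical, chosen to mirror the example prompt.

```python
def build_post_prompt(platform: str, max_chars: int, audience: str,
                      feature: str, tone: str, hashtag: str) -> str:
    """Assemble a specific, constrained prompt from structured fields."""
    return (
        f"Write a {max_chars}-character {platform} post for a {audience} company "
        f"announcing a new feature called '{feature}'. "
        f"Use a {tone} tone and include the hashtag {hashtag}."
    )


prompt = build_post_prompt(
    platform="Twitter",
    max_chars=280,
    audience="B2B SaaS",
    feature="Analytics Dashboard",
    tone="professional but engaging",
    hashtag="#SaaS",
)
print(prompt)
```

Keeping the template in one function means every user request carries the same context and constraints, and you can refine the wording in a single place as you test.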

Launching and Monetizing Your AI App

You've built a functional MVP. That's a huge milestone, but now the real work begins: getting it out into the world and turning it into an actual business.

Launching your AI app is way more than just flipping a switch. It's about making your creation accessible, reliable, and ready to grow. And beyond that initial push, you absolutely need a clear plan for making money to keep the lights on and scale your efforts.

Thankfully, the right deployment platform can make this part almost effortless. Modern services are designed to get your app live in minutes, not days, so you can focus on your users instead of wrestling with server configurations.

Deploying Your App to the World

Getting your app online should be fast and painless. Platforms like Vercel and AWS Amplify have completely changed the game here, offering seamless integration with the tools you already use. You just connect your GitHub repository, and with a few clicks, your app is deployed across the globe.

This approach is known as Continuous Deployment. Every time you push a code update to your repository, your live app is automatically rebuilt and deployed. It's a massive time-saver that drastically reduces the chance of manual errors. This lets you ship new features and bug fixes to your users almost instantly, keeping your momentum high.

Vercel's homepage nails this concept, emphasizing a smooth, fast developer experience.

Their whole "Develop, Preview, Ship" mantra is the core loop of modern web development. It's what allows you to iterate quickly and stay ahead.

Monitoring Performance and Managing Costs

Once your app is live, two things become critically important: keeping an eye on performance and managing your API spending. As users start pouring in, you need to know how your app is holding up.

Monitoring tools will help you track key metrics like:

- Server Response Times: Are your users experiencing frustrating lag?

- Error Rates: Are there hidden bugs in your code that need squashing?

- API Usage: How many calls are you making to your LLM, and what's it costing you?

That last point is absolutely crucial for an AI app. Unchecked API usage can lead to shocking bills that can sink your project before it even gets off the ground. You have to implement cost-control measures from day one. Set hard spending limits in your provider's dashboard and add rate limits in your code to prevent abuse.
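A rough back-of-the-envelope estimate helps you see a bill coming before it arrives. The sketch below assumes per-1K-token pricing with separate input and output rates; every number in it is hypothetical, so plug in your provider's current prices and your own traffic.

```python
def estimate_monthly_cost(calls_per_day: int, input_tokens: int, output_tokens: int,
                          input_price_per_1k: float, output_price_per_1k: float,
                          days: int = 30) -> float:
    """Estimate monthly API spend from average tokens per call and per-1K prices."""
    cost_per_call = (
        (input_tokens / 1000) * input_price_per_1k
        + (output_tokens / 1000) * output_price_per_1k
    )
    return calls_per_day * days * cost_per_call


# Hypothetical numbers: 2,000 calls a day, 500 input and 250 output tokens per call,
# $0.01 per 1K input tokens and $0.03 per 1K output tokens.
monthly = estimate_monthly_cost(2000, 500, 250, 0.01, 0.03)
print(f"${monthly:,.2f} per month")
```

With these made-up figures, each call costs about 1.25 cents, which adds up to roughly $750 a month.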

A common rookie mistake is launching without a cost management strategy. Your API spend is a direct operational cost, so you need to monitor it as closely as you monitor user sign-ups.

This proactive approach is what keeps your app both functional and financially viable as you grow.
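For the in-code rate limits mentioned above, here is a minimal per-user sliding-window sketch. It keeps state in memory, so it only suits a single long-running process; on serverless platforms you would likely need a shared store instead. The class name and limits are illustrative assumptions.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class RateLimiter:
    """Allow at most `max_calls` per user within a rolling `window_seconds`."""

    def __init__(self, max_calls: int, window_seconds: float):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self._calls = defaultdict(deque)  # user_id -> timestamps of recent calls

    def allow(self, user_id: str, now: Optional[float] = None) -> bool:
        """Return True and record the call if the user is under the limit."""
        now = time.monotonic() if now is None else now
        recent = self._calls[user_id]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_calls:
            return False
        recent.append(now)
        return True


limiter = RateLimiter(max_calls=2, window_seconds=60)
print([limiter.allow("user-1", now=t) for t in (0, 1, 2)])
```

Check `allow` before every LLM call and return a friendly "slow down" message when it comes back `False`.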

Choosing Your Monetization Model

Now for the fun part: how are you going to make money? The right monetization model aligns with the value you provide and what your target audience is willing to pay. For AI apps, a few proven strategies work exceptionally well.

The most popular models are:

- Subscription (SaaS): Users pay a recurring monthly or annual fee for access. This model gives you predictable revenue and is perfect for tools that offer ongoing value.

- Freemium: You offer a basic, free version of your app to attract a large user base, with an option to upgrade to a paid plan for advanced features or higher usage limits. This is a great way to let users see the value before they commit.

- Pay-Per-Use (Credit System): Users buy credits that they spend on specific actions, like generating an image or summarizing a document. This model directly ties cost to usage, which feels fair to users and protects you from covering heavy API costs for inactive customers.

Each model has its pros and cons, so think carefully about which one best fits your app's specific function and your users' needs.
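To show how a credit system ties cost to usage, here is a small in-memory sketch. The action names and prices are hypothetical, and a real app would store balances in its database and record each charge alongside the payment provider's records.

```python
from typing import Dict


class InsufficientCredits(Exception):
    """Raised when a user cannot afford the requested action."""


class CreditLedger:
    """Track prepaid credits per user and charge a fixed price per action."""

    # Hypothetical prices, in credits per action.
    PRICES = {"summarize_document": 1, "generate_image": 5}

    def __init__(self) -> None:
        self._balances: Dict[str, int] = {}

    def top_up(self, user_id: str, credits: int) -> None:
        if credits <= 0:
            raise ValueError("credits must be positive")
        self._balances[user_id] = self.balance(user_id) + credits

    def balance(self, user_id: str) -> int:
        return self._balances.get(user_id, 0)

    def charge(self, user_id: str, action: str) -> int:
        """Deduct the action's price and return the remaining balance."""
        price = self.PRICES[action]
        current = self.balance(user_id)
        if current < price:
            raise InsufficientCredits(f"{action} costs {price}, balance is {current}")
        self._balances[user_id] = current - price
        return self._balances[user_id]


ledger = CreditLedger()
ledger.top_up("user-1", 6)
print(ledger.charge("user-1", "generate_image"))
```

Charging before you call the LLM means a user with an empty balance never triggers an API cost on your side.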

Scaling Your Infrastructure

As your user base grows, so will the demands on your infrastructure. The beauty of using modern cloud platforms is that scaling is often built-in. Services like Vercel's serverless functions or AWS Lambda automatically scale to meet traffic spikes without you ever having to manually manage servers.

This elastic infrastructure means you can go from ten users to ten thousand without your app slowing down. It frees you up to focus on building new features and marketing your product, confident that your technical foundation can handle the success. This is how you build an AI app that lasts.

Common Questions About Building an AI App

As you start sketching out your AI app, the questions will start piling up. It's unavoidable. So, let's tackle some of the most common ones I hear from founders and developers, covering everything from budget and timelines to the real-world hurdles you're likely to face.

How Much Does It Cost to Build an AI App?

There's no single price tag here—the cost to build an AI app varies wildly depending on its complexity and your approach.

A lean and mean MVP, especially a "GPT Wrapper" put together with no-code tools or by a single developer, could come in at just a few thousand dollars. If you're aiming for a more polished, market-ready product built by a small team, you're more likely looking at a range of $25,000 to $75,000. The biggest cost drivers are pretty standard: development hours, UI/UX design, and cloud hosting. But the one you really have to watch is the ongoing API costs from your LLM provider.

The smartest financial move you can make is to start with a bare-bones MVP. Get it into the hands of real users to validate your idea and gather feedback before you commit to a bigger budget for a full-featured product.

Now, if your big idea requires a completely custom-trained AI model built from the ground up, the costs can easily rocket past $100,000. This path is really only for highly specialized apps where off-the-shelf APIs just won't cut it.

Do I Need to Be a Machine Learning Expert?

Nope. Not anymore. The barrier to entry for building AI apps has absolutely plummeted.

Thanks to powerful and well-documented APIs from companies like OpenAI, Anthropic, and Google, you can plug world-class AI capabilities directly into your product. If you have solid web or mobile development skills—say, you're comfortable with JavaScript or Python—you can tap into state-of-the-art AI through simple API calls.

This shift is huge. It frees you up to focus on what actually makes or breaks a product: creating a killer user experience and solving a real, specific problem for your audience. You can concentrate on the product, not the underlying AI research.

What Are the Biggest Challenges?

While the technical side is more accessible than ever, building a successful AI app still comes with its own unique set of headaches. Knowing them upfront can save you a world of pain later.

Here are the most common hurdles you'll run into:

- Prompt Engineering: Getting consistent, high-quality output from an LLM is more art than science. It takes a ton of testing, refining, and a deep understanding of how to phrase instructions to guide the model effectively.

- Managing API Costs: This one can sneak up on you. API usage can get very expensive, very fast, especially as your user base grows. You absolutely have to build in rate limits, caching, and cost monitoring from day one to avoid a surprise bill that could sink your project.

- Handling AI Unpredictability: LLMs can "hallucinate" or just plain get things wrong. It's on you to build safeguards, validation layers, and clear UI to manage this unpredictability and keep your users' trust.

- Market Differentiation: The AI app market is getting crowded, fast. Real success comes from finding a specific niche and delivering a focused, superior user experience—not just building another thin wrapper around a popular API.
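Caching, one of the cost controls listed above, can be as simple as remembering answers to prompts you have already paid for. The sketch below wraps any prompt-to-text function; `CachedModel` is a hypothetical name, and a real deployment would probably use a shared cache with expiry rather than an in-process dictionary.

```python
import hashlib
from typing import Callable, Dict


class CachedModel:
    """Wrap a prompt -> text function and reuse answers for repeated prompts."""

    def __init__(self, call_model: Callable[[str], str]):
        self._call_model = call_model
        self._cache: Dict[str, str] = {}
        self.misses = 0  # number of real (billable) model calls

    def ask(self, prompt: str) -> str:
        normalized = prompt.strip()
        # Hash the prompt so cache keys stay short even for long inputs.
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in self._cache:
            self.misses += 1
            self._cache[key] = self._call_model(normalized)
        return self._cache[key]


# Stand-in for a real LLM call, used only to demonstrate the cache.
model = CachedModel(lambda prompt: prompt.upper())
model.ask("hello")
model.ask("hello ")
print(model.misses)
```

This only helps when identical prompts recur, for example shared templates or popular documents, so measure your hit rate before relying on it.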

How Long Does It Take to Build an AI App MVP?

With today's tools and APIs, you can get a Minimum Viable Product out the door much faster than you might think.

A skilled solo developer or a small, focused team using a modern framework like Next.js and an LLM API can often launch a functional MVP in 4 to 12 weeks. This timeline assumes you're ruthlessly focused on the single core feature that delivers immediate value to your first users.

The whole game is to launch quickly, get your product in front of real people, and start learning from their feedback. That iterative loop is infinitely more valuable than spending six months building a "perfect" product in isolation, only to find out you missed the mark. Speed is your biggest advantage at this early stage.

---

Ready to stop wondering and start building? GPT Wrapper Apps gives you validated, market-ready AI app ideas complete with detailed Product Requirements Documents (PRDs). Skip the brainstorming and get straight to building a profitable product. Find your next project at GPT Wrapper Apps.


About Gavin Elliott

AI entrepreneur and founder of GPT Wrapper Apps. Expert in building profitable AI applications and helping indie makers turn ideas into successful businesses. Passionate about making AI accessible to non-technical founders.